In 2007, the Intergovernmental Panel on Climate Change (IPCC) issued its Fourth Assessment Report. The report included predictions of big increases in average world temperatures by 2100, resulting in an increasingly rapid loss of the world’s glaciers and ice caps, a dramatic global sea level rise that would threaten low-lying coastal areas, the spread of tropical diseases, and severe drought and floods.

These dire predictions are not, however, the result of scientific forecasting; rather, they are the opinions of experts. Expert opinion on climate change has often been wrong. For instance, a search of headlines in the New York Times found the following:

Sept. 18, 1924 MacMillan Reports Signs of New Ice Age

March 27, 1933 America in Longest Warm Spell Since 1776

May 21, 1974 Scientists Ponder Why World’s Climate Is Changing: A Major Cooling Widely Considered to be Inevitable

Problems with Computer Models. Climate scientists now use computer models, but there is no evidence that modeling improves the accuracy of predictions. For example, according to the models, the Earth should be warmer than actual measurements show it to be. Furthermore:

- The General Circulation Models (GCMs) that are used failed to predict recent global average temperatures as accurately as fitting a simple curve to the historical data and extending it into the future.

- The models forecast greater warming at higher altitudes in the tropics, whereas the data show the greatest warming has occurred at lower altitudes and at the poles.

- Furthermore, individual models have produced widely different forecasts from the same initial conditions, and minor changes in assumptions can produce forecasts of global cooling.

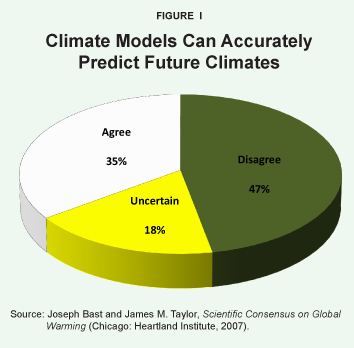

Skepticism Among the Scientists. Thus it is not surprising that international surveys of climate scientists from 27 countries in 1996 and 2003 found growing skepticism over the accuracy of climate models. Of more than 1,060 respondents, only 35 percent agreed with the statement, “Climate models can accurately predict future climates,” whereas 47 percent disagreed.

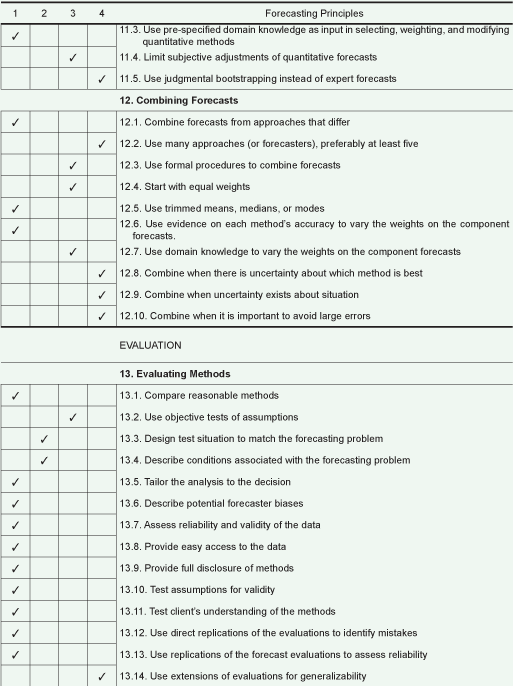

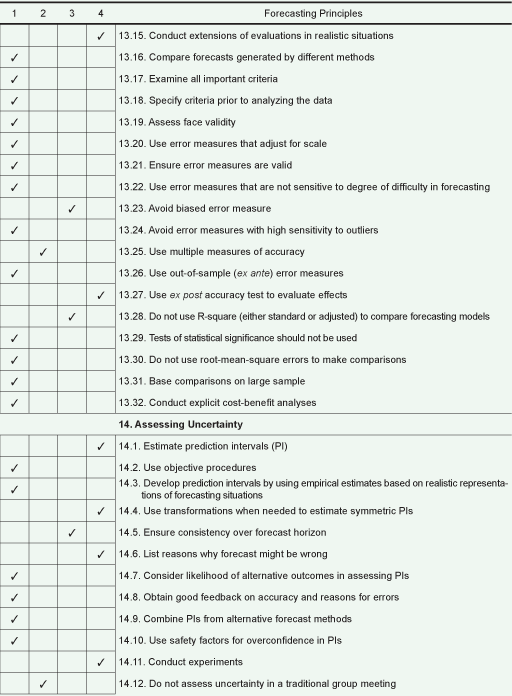

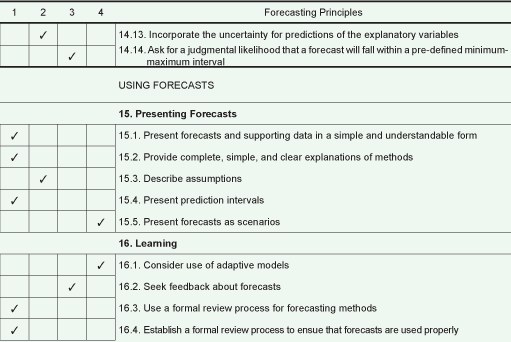

Violations of Forecasting Principles. Forty internationally-known experts on forecasting methods and 123 expert reviewers codified evidence from research on forecasting into 140 principles. The empirically-validated principles are available in the Principles of Forecasting handbook and at forecastingprinciples.com. These principles were designed to be applicable to making forecasts about diverse physical, social and economic phenomena, from weather to consumer sales, from the spread of nonnative species to investment strategy, and from decisions in war to egg-hatching rates. They were applied to predicting the 2004 U.S. presidential election outcome and provided the most accurate forecast of the two-party vote split of any published forecast, and did so well ahead of election day (see polyvote.com).

The authors of this study used these forecasting principles to audit the IPCC report. They found that:

- Out of the 140 forecasting principles, 127 principles are relevant to the procedures used to arrive at the climate projections in the IPCC report.

- Of these 127, the methods described in the report violated 60 principles.

- An additional 12 forecasting principles appear to be violated, and there is insufficient information in the report to assess the use of 38.

As a result of these violations of forecasting principles, the forecasts in the IPCC report are invalid. Specifically:

The Data Are Unreliable. Temperature data are highly variable over time and space. Local proxy data of uncertain accuracy (such as ice cores and tree rings) must be used to infer past global temperatures. Even over the period during which thermometer data have been available, readings are not evenly spread across the globe and are often subject to local warming from increasing urbanization. As a consequence, the trend over time can be rising, falling or stable depending on the data sample chosen.

The Forecasting Models Are Unreliable. Complex forecasting methods are only accurate when there is little uncertainty about the data and the situation (in this case: how the climate system works), and causal variables can be forecast accurately. These conditions do not apply to climate forecasting. For example, a simple model that projected the effects of Pacific Ocean currents (El Niño-Southern Oscillation) by extrapolating past data into the future made more accurate three-month forecasts than 11 complex models. Every model performed poorly when forecasting further ahead.

The Forecasters Themselves Are Unreliable. Political considerations influence all stages of the IPCC process. For example, chapter by chapter drafts of the Fourth Assessment Report “Summary for Policymakers” were released months in advance of the full report, and the final version of the report was expressly written to reflect the language negotiated by political appointees to the IPCC. The conclusion of the audit is that there is no scientific forecast supporting the widespread belief in dangerous human-caused “global warming.” In fact, it has yet to be demonstrated that long-term forecasting of climate is possible.

More than 20 years ago, scientists began to express concern that human activities, primarily tropical deforestation and the burning of fossil fuels for energy, threaten to cause a rapid warming of the Earth by adding carbon dioxide (CO2) to the atmosphere. Recognizing the problems that global warming might cause, in 1988 the World Meteorological Organization (WMO) and the United Nations Environment Program (UNEP) established the Intergovernmental Panel on Climate Change (IPCC).2 The purpose of the IPCC was to provide a comprehensive, objective, scientific, technical and socio-economic assessment of the current understanding of human-induced climate change, its potential impacts and options for adaptation and mitigation.

“Climate change policies must be based on accurate, scientific forecasts.”

In 2007, the IPCC issued its Fourth Assessment Report. The Assessment in fact consists of three reports and a “synthesis” report. The first part was titled “The Physical Science Basis” and was authored by the IPCC’s Working Group One (WG1), a panel of experts on climate science, modeling and history. This paper focuses on the first report.3 It included predictions of dramatic increases in average world temperatures by 2100, which might in turn cause such serious environmental harms as: a global sea level rise that would threaten low-lying coastal areas, the spread of tropical diseases, an increasingly rapid loss of the world’s glaciers and ice caps, and a worsening of drought and flooding events across broad regions.4

Although the IPCC’s 1,056-page report makes these dire predictions, nowhere does it refer to empirically-validated forecasting methods, despite the fact these are conveniently available in books and articles and on Web sites. These evidence-based forecasting principles have been validated through experiment and testing and comparison to actual outcomes. The evidence shows that adherence to the principles increases forecast accuracy. This paper uses these scientific forecasting principles to ask: Are the IPCC’s forecasts a good basis for developing public policy? The answer is “no.”

Three elements are necessary for governments to make rational policy responses to climate change: Scientists must accurately predict (1) global temperature, (2) the effects of any temperature changes and (3) the effects of feasible alternative policy responses. At any step in this process, the failure to obtain a valid forecast would render forecasts at the next step in the process meaningless. This study focuses primarily, but not exclusively, on the first of the three forecasts required: obtaining long-term forecasts of global temperature. It finds that due to the unscientific method by which these forecasts were obtained, they cannot be relied upon. [See the sidebar, “Three Forecasts Required for Climate Change Policies.”]

Scientific forecasting methods should be used to project climate change. The methodologies used should be those shown empirically to be relevant to the particular types of problems involved in climate forecasting. However, the evidence shows that the IPCC forecasts are instead based on opinions.

Many public-policy decisions are based on unaided expert judgments. The experts may have access to empirical studies and other information, but they often make predictions, or judgmental forecasts, without the aid of scientific forecasting principles. Research on persuasion has shown that people have substantial faith in the value of such forecasts, and this faith increases when experts agree with one another. However, the opinions of experts are an invalid basis for public policy.

“The opinions of experts are wrong as often as the opinions of nonexperts.”

Judgmental Forecasts. Comparative empirical studies have routinely concluded that judgmental forecasting by experts is the least accurate of the methods available to make forecasts.5 For example, Professor Phil Tetlock, of the University of California at Berkeley, conducted a major study in which he recruited 284 participants whose professions included, “commenting or offering advice on political and economic trends.”6 He asked these experts to forecast the probability that various events would or would not occur, picking areas (geographic and substantive) within and outside their areas of expertise. By 2003, he had accumulated over 82,000 forecasts. The experts barely outperformed nonexperts and neither group did well against simple forecasting rules that extrapolate from the past to predict the future.7

Examples of expert climate forecasts that turned out to be completely wrong are also easy to find. [See the sidebar on “Climate Forecasts Based on Expert Opinion.”]

Computer Modeling versus Scientific Forecasting. Over the past few decades, the methodology used in climate forecasting has shifted so that expert opinions are informed by computer models. Advocates of complex climate models claim that they are based on well-established laws of physics. But there is clearly much more to the models than physical laws, otherwise the models would all produce the same output, which they do not, and there would be no need for confidence estimates for model forecasts, which there certainly is. Climate models are, in effect, mathematical ways for experts to express their opinions.8

There is no empirical evidence that presenting opinions in mathematical terms rather than in words improves the accuracy of forecasts. In the 1800s, Thomas Malthus forecast mass starvation. Expressing his opinions in a mathematical model, he predicted that the food supply would increase arithmetically while the human population would grow at a geometric rate and go hungry. Mathematical models have not become much more accurate since.

“Computer modeling does not improve the accuracy of opinion.”

Two international surveys of climate scientists from 27 countries, in 1996 and 2003, show increasing skepticism over the accuracy of climate models.9 Of more than 1,060 respondents, only 35 percent agreed with the statement, “Climate models can accurately predict future climates,” whereas 47 percent disagreed. [See Figure I.]10

Problems with Climate Models. Researchers who have examined long-term climate forecasts have concluded they are based on nothing more than scientists’ opinions expressed in complex mathematical terms, without valid evidence to support the chosen approach.11

For example, when computer simulations project future global mean temperatures with twice the current level of atmospheric CO2, they assume that the temperature forecast is as accurate as a computer simulation of present temperatures with current levels of CO2.12 Yet it has never been demonstrated that temperature forecasts are as accurate as simulations of current conditions based on actual temperature data. Indeed, there are even serious questions surrounding model simulations of current temperature with current CO2 levels. According to the models, the earth should be warmer than actual measurements show it to be, which is why modelers adjust their findings to fit the data.

“Climate models based on historical data don’t accurately predict current temperatures.”

The models do not represent the real world sufficiently well to be relied upon for forecasting.13 As physicist Freeman Dyson concluded, climate models “do a very good job of describing the fluid motions of the atmosphere and the oceans,” but “they do a very poor job of describing the clouds, the dust, the chemistry and the biology of fields and farms and forests.”14

The climate models’ simulations do not correspond to past or present temperatures, rainfall patterns, tropical cyclones or plant responses. Professor Bob Carter, of the Marine Geophysical Laboratory at James Cook University in Australia, examined evidence on the predictive ability of the general circulation models (GCMs) used by the IPCC scientists. He found that while the models included some basic principles of physics, scientists had to make a number of “educated guesses” because knowledge about the physical processes of the earth’s climate is incomplete.15 Thus, in practice:

- The GCMs failed to predict recent global average temperatures as accurately as fitting a simple curve to the historical data and extending it into the future.

- The models forecast greater warming at higher altitudes in the tropics, when the greatest warming has occurred at lower altitudes and at the poles.

- Furthermore, individual models have produced widely different forecasts from the same initial conditions, and minor changes in their assumptions can produce forecasts of global cooling.16

When models predict global cooling, the forecasts are rejected by modelers as “outliers” or “obviously wrong.” This suggests that when the models are averaged together to create consensus estimates of temperature change, the results are biased due to the omission of models that show cooling.17
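The arithmetic behind this concern can be sketched in a few lines. The example below uses invented trend values (not output from any actual climate model) and a hypothetical helper, `ensemble_mean`, to show that discarding every run that projects cooling can only raise the average of the runs that remain.

```python
from statistics import mean

# Hypothetical projected temperature changes (degrees C) from six model runs.
# These values are invented for illustration; they are not real model output.
projected_changes = [2.1, 1.4, -0.6, 3.0, -0.2, 0.9]


def ensemble_mean(changes, drop_cooling=False):
    """Average the projections, optionally discarding runs that show cooling."""
    kept = [c for c in changes if c >= 0] if drop_cooling else list(changes)
    return mean(kept)


all_runs = ensemble_mean(projected_changes)
warming_only = ensemble_mean(projected_changes, drop_cooling=True)

print(f"Mean of all runs:          {all_runs:.2f}")
print(f"Mean with cooling dropped: {warming_only:.2f}")
```

How large the shift is depends entirely on how many runs are discarded and how far below zero they fall; the sketch shows only the direction of the effect.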

Researchers have found serious deficiencies in the GCMs on which the IPCC based its previous Third Assessment Report. For example, David Bellamy and Jack Barrett found: 18

- The models produced very different cloud distributions and none came close to the actual distribution of clouds, which is important because the type and distribution of clouds can either enhance or reduce the Earth’s temperature. Some clouds tend to trap more of the sun’s radiant heat, while others reflect the sun’s rays away from the Earth’s surface, mitigating the effect of increased greenhouse gases.

- Assumptions about the amount of radiation from the Sun absorbed by the atmosphere and the Earth’s surface varied considerably from model to model, yielding widely varying temperature forecasts. This is important because absorption of heat energy from sunlight is the primary mechanism of global warming.

- The models did not accurately represent the known effects of CO2, much less the uncertain possible feedbacks that reduce or enhance those effects, and as a result their climate change forecasts cannot be relied upon.

The review by Bellamy and Barrett concluded: “The climate system is a highly complex system and, to date, no computer models are sufficiently accurate for their [IPCC] predictions of future climate to be relied upon.”19 And since climate model forecasts for periods of up to five years have proven to be inaccurate, the review concluded there is little basis upon which to make accurate projections for 50 to 100 years.20

Referring to the GCMs used for the IPCC forecasts, a lead author of Chapter 3 of the Fourth Assessment Report wrote that “… the science is not done because we do not have reliable or regional predictions of climate.”21

“Climate models based on recent data can’t accurately predict temperatures five years in the future.”

Other agencies’ attempts to forecast for shorter periods and smaller geographical areas have also been unsuccessful. For instance, annual forecasts by New Zealand’s National Institute of Water and Atmospheric Research (NIWA) are no more accurate than chance.22 NIWA’s low success rate is comparable to other forecasting groups worldwide, according to New Zealand climatologist Jim Renwick, a member of the IPCC Working Group I and a coauthor of the Fourth Assessment Report. (Renwick also serves on the World Meteorological Organization Commission for Climatology Expert Team on Seasonal Forecasting.) He concludes that current GCMs are unable to predict future global climate any better than chance.23

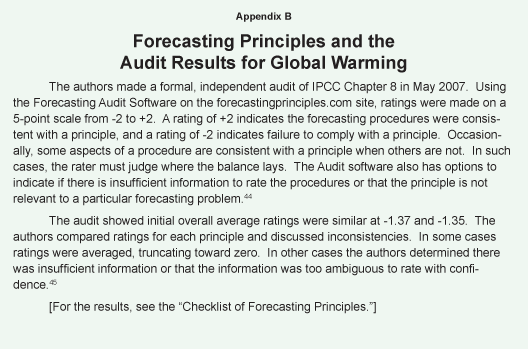

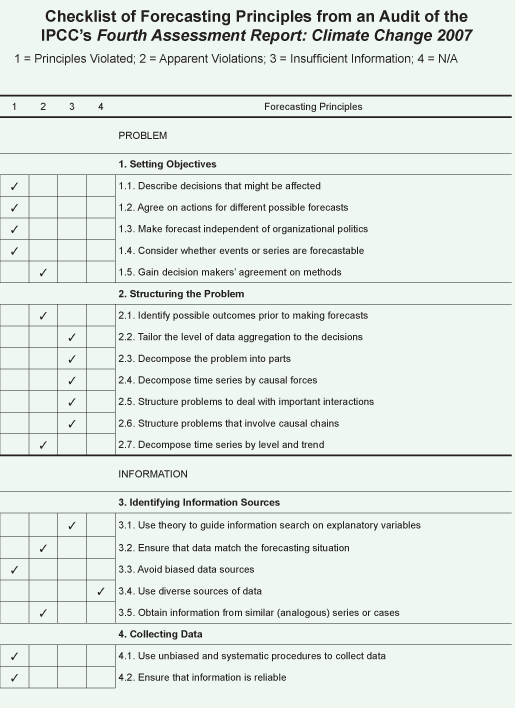

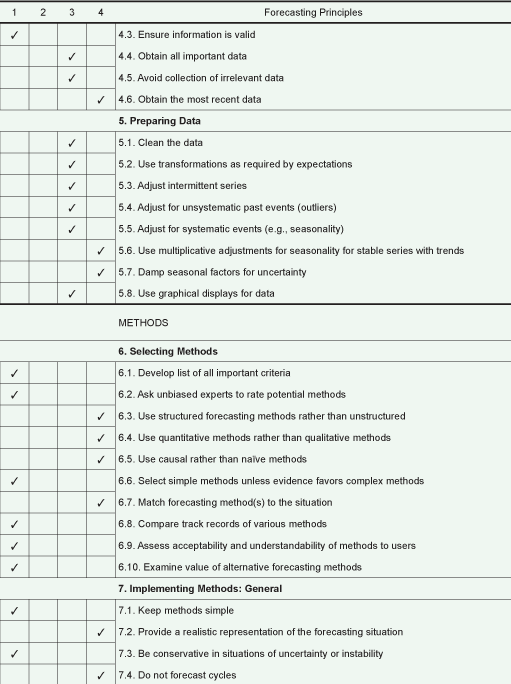

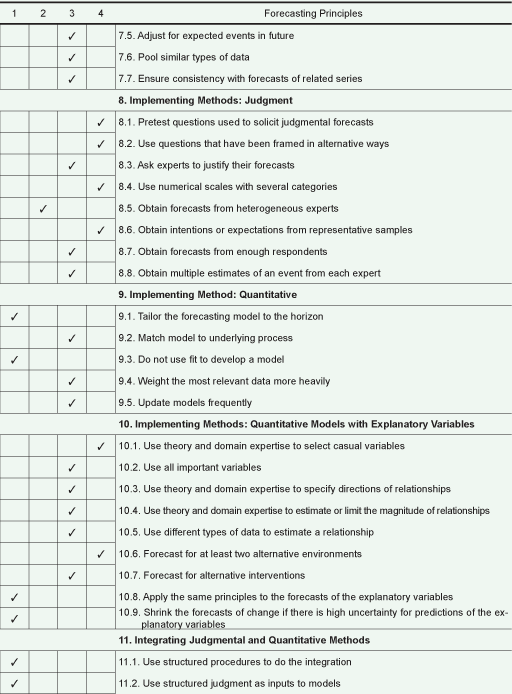

To what extent have those who have made climate forecasts used scientifically tested forecasting procedures? Using forecasting audit software, the authors of this study independently assessed the extent to which IPCC procedures conformed to or violated forecasting principles.

Climate Forecasters’ Use of the Scientific Literature on Forecasting Methods.24 There is little use of forecasting principles in environmental research generally; apparently they are not used at all in climate research.25 An Internet search found no Web sites or papers on climate change that referenced forecasting methodology.26 Neither the IPCC Report’s Chapter 8, “Climate models and their evaluation,” nor any of the 788 referenced works therein refers to forecasting methodology or established forecasting principles.27 The same was true of Chapter 9, “Understanding and attributing climate change,” and its 535 references. A survey of climate scientists (described below) did not yield references to any relevant papers on forecasting.28

Forecasting principles have been derived from all known empirical evidence on estimating the as yet unknown. The principles are therefore scientific. Evidence comes from all disciplines that have produced relevant evidence and the principles are applicable to all forecasting problems — from weather to company sales, from the spread of non-native species to investment strategy, and from war fighting to egg hatching rates. [See the sidebar on Principles of Forecasting.]

Forecasting Audit Results. Of the 140 forecasting principles, the audit found that 127 forecasting principles are relevant to the procedures used to arrive at the climate projections in the IPCC report. Of these 127, the methods described in the report definitely violate 60 principles, 12 appear to be violated and there is insufficient information to assess the use of 38.
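The audit counts can be tallied as a quick check. The snippet below is only bookkeeping on the figures reported above; the variable names are illustrative, and the text does not state how the principles outside the three listed categories were rated.

```python
# Counts reported in the audit of the IPCC report.
TOTAL_PRINCIPLES = 140
RELEVANT = 127
audit_counts = {
    "clearly violated": 60,
    "apparently violated": 12,
    "insufficient information": 38,
}

accounted_for = sum(audit_counts.values())
# Relevant principles not covered by the three categories above.
remaining = RELEVANT - accounted_for

for label, count in audit_counts.items():
    share = 100 * count / RELEVANT
    print(f"{label:>25}: {count:3d} ({share:.0f}% of relevant principles)")
print(f"{'not in these categories':>25}: {remaining:3d}")
```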

“Official climate reports do not use scientific forecasting principles.”

For example, “Make sure forecasts are independent of politics (Principle 1.3),” is one of the 60 principles the IPCC process clearly violated. David Henderson, a former Head of Economics and Statistics at the OECD, has given a detailed account of how political considerations influence all stages of the IPCC process.29 For example, the “Summary for Policymakers” that accompanies each of the IPCC’s assessment reports is released with much media and public fanfare. The summary is written in negotiation with the explicit input of legislators, policymakers and/or diplomatic appointees. Most recently, chapter by chapter drafts of the Fourth Assessment Report’s “Summary for Policymakers” were released months in advance of the final version of the full report, with the directive that the final version of the chapters in the report be expressly written to reflect the language negotiated by the lead authors with the participating political appointees to the IPCC.

Some principles are so important that any forecasting process that does not adhere to them cannot produce valid forecasts. The following are three such principles, all of which are based on strong empirical evidence, and all of which were violated by the forecasting procedures described in Chapter 8 of the IPCC report.

Principle: Consider whether the events or series can be forecasted. This principle refers to whether a forecast would likely be more accurate than assuming things will not change. Predicting no change is to use a naïve forecasting method. It is appropriate to use a naïve method when knowledge is poor and uncertainty is high, as with climate.30 There is even controversy among climate scientists over something as basic as the current trend with respect to temperature. Temperature data is highly variable and cyclical, and proxy data (such as ice cores and tree rings) must be used to infer temperatures more than a few decades past. Whether a trend over time is determined to be rising, falling or stable depends largely on the beginning and endpoint chosen.31
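The dependence of a measured trend on the chosen start and end points can be seen in a small sketch. It assumes a purely synthetic cyclical series with no underlying trend (not real temperature data) and a hypothetical helper, `ls_slope`, that fits an ordinary least-squares line to whatever window it is given.

```python
import math


def ls_slope(values):
    """Ordinary least-squares slope of values against their index 0..n-1."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


# Synthetic, purely cyclical series with a 60-step period and no underlying trend.
series = [math.sin(2 * math.pi * t / 60) for t in range(120)]

# Three windows on the same series: a rising stretch, a falling stretch,
# and a stretch that is symmetric around a peak.
windows = {"steps 0-14": (0, 15), "steps 15-44": (15, 45), "steps 0-30": (0, 31)}
slopes = {name: ls_slope(series[a:b]) for name, (a, b) in windows.items()}

for name, s in slopes.items():
    print(f"{name}: slope = {s:+.4f}")
```

The same series yields a rising, a falling and an essentially flat fitted trend, depending only on which window is selected.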

Global climate is complex and scientific evidence on key relationships is weak or absent. For example, the IPCC holds that CO2 plays a significant causal role in determining global temperature, but a number of studies have presented evidence that causation generally works in the opposite direction (increases in atmospheric levels of CO2 are a result of higher temperatures) and that CO2 variation plays at most a minor role in climate change.32

“Temperature data is often incomplete and unreliable.”

Measurements of key variables such as local temperatures and a representative global temperature are contentious and subject to revision. In the case of modern measurements they must be adjusted for the changing distribution of weather stations and such complicating factors as the urban-heat-island effect. The interpretation of proxy data for ancient temperatures is often speculative.33 Finally, it is difficult to forecast the causal variables — for example CO2 and cloudiness.34

Although the authors of Chapter 8 claim that the forecasts of global mean temperature are well-founded, their language is imprecise and relies heavily on such words as “generally,” “reasonably well,” “widely,” and “relatively.” These terms indicate that the IPCC forecasts are uncertain, and the chapter makes many explicit references to uncertainty.35

In discussing temperature modeling, the authors wrote, “The extent to which these systematic model errors affect a model’s response to external perturbations is unknown, but may be significant,” and, “The diurnal temperature range… is generally too small in the models, in many regions by as much as 50 percent,” and “It is not yet known why models generally underestimate the diurnal temperature range.”36

Given the high uncertainty regarding climate, the appropriate naïve method for this situation would be the “no change” model since prior evidence suggests that attempts to improve upon the naïve model often increase forecast error. To reverse this conclusion, one would have to produce validated evidence in favor of alternative methods. Chapter 8 of the IPCC report does not provide this evidence. If long-term forecasting of climate is possible, it has yet to be demonstrated.
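A no-change benchmark is simple to state in code. The sketch below is a minimal illustration, assuming invented toy numbers and hypothetical function names; it shows how a candidate forecast can be scored against the naïve forecast by dividing the candidate's total absolute error by the benchmark's.

```python
def naive_forecast(history, horizon):
    """No-change forecast: repeat the last observed value for every future step."""
    return [history[-1]] * horizon


def relative_absolute_error(candidate, benchmark, actuals):
    """Candidate's total absolute error divided by the benchmark's.

    Values above 1 mean the candidate did worse than the benchmark.
    """
    cand_err = sum(abs(c - a) for c, a in zip(candidate, actuals))
    bench_err = sum(abs(b - a) for b, a in zip(benchmark, actuals))
    return cand_err / bench_err


# Invented toy numbers for illustration only; not temperature measurements.
history = [10.0, 10.2, 9.9, 10.1]
actuals = [10.0, 10.3, 9.8]           # what later happened (hypothetical)
model_forecast = [10.4, 10.8, 11.2]   # output of some hypothetical complex model

benchmark = naive_forecast(history, horizon=len(actuals))
rae = relative_absolute_error(model_forecast, benchmark, actuals)
print(f"Relative absolute error vs. no-change benchmark: {rae:.2f}")
```

A ratio below 1 on out-of-sample data would be the kind of validated evidence needed to justify moving away from the naïve method.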

Principle: Keep forecasting methods simple. IPCC chapters and related papers leave the impression that climate forecasters believe that complex models are necessary for forecasting climate and that forecast accuracy will increase with model complexity. Complex methods involve such things as the use of a large number of variables in forecasting models and complex interactions between variables. Complex forecasting methods are only accurate when there is little uncertainty about relationships now and in the future, where the data are subject to little error, and where the causal variables can be accurately forecast. These conditions do not apply to climate forecasting. Thus, simple methods are recommended.

“The models are complex and produce conflicting results.”

The use of complex models when uncertainty is high conflicts with the evidence from forecasting research.37 For example, scientists Halmar Halide and Peter Ridd compared predictions of El Niño-Southern Oscillation events from a simple extrapolation of the time series with those from other researchers’ complex models.38 Some of the complex models were dynamic causal models incorporating laws of physics. In other words, they were similar to those upon which the IPCC authors depended. The simple model made more accurate three-month predictions than all 11 of the complex models. Every model performed poorly when forecasting further ahead.

Using complex methods prevents understanding of how forecasts were derived, makes criticism difficult and makes error detection unlikely.

Principle: Do not use fit to develop the model. It is unclear to what extent the models described in the IPCC report are either based on, or have been tested against, sound empirical data.39 However, some statements were made about the ability of the models to fit historical data, after tweaking their parameters. Extensive research has shown that the ability of a model to reproduce historical data has little relationship to forecast accuracy.40 Fit can be improved by making a model more complex. The typical consequence of increasing complexity to improve fit, however, is to decrease the accuracy of forecasts.
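The gap between fit and forecast accuracy can be illustrated with a deliberately extreme sketch. It assumes an invented series that fluctuates around a constant level, a simple model that forecasts the historical mean, and a complex model (a polynomial forced through every historical point, via the hypothetical helper `interpolate`). Neither is a climate model; the point is only that a perfect fit to the past says little about accuracy on data held back from the fitting.

```python
def interpolate(xs, ys, x):
    """Evaluate the Lagrange polynomial through every (xs, ys) point at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += yi * weight
    return total


def mean_abs_error(predictions, actuals):
    """Average absolute difference between predictions and actual values."""
    return sum(abs(p - a) for p, a in zip(predictions, actuals)) / len(actuals)


# Synthetic series that fluctuates around a constant level (invented values).
fit_x, fit_y = list(range(8)), [0.1, -0.2, 0.15, -0.1, 0.2, -0.15, 0.1, -0.05]
test_x, test_y = [8, 9, 10], [0.05, -0.1, 0.1]

level = sum(fit_y) / len(fit_y)  # simple model: forecast the historical mean

fit_error = {
    "simple": mean_abs_error([level] * len(fit_x), fit_y),
    "complex": mean_abs_error([interpolate(fit_x, fit_y, x) for x in fit_x], fit_y),
}
forecast_error = {
    "simple": mean_abs_error([level] * len(test_x), test_y),
    "complex": mean_abs_error([interpolate(fit_x, fit_y, x) for x in test_x], test_y),
}
print("Error on the fitting period:", fit_error)
print("Error on the holdout period:", forecast_error)
```

The complex model reproduces the fitting period exactly yet does far worse than the simple model on the three held-out points, which is why models should be compared on data not used to build them.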

Other Audit Results. The audit also found 12 “apparent violations.” These are principles for which the authors had concerns over the coding or did not agree that the procedures clearly violated the principle. Finally, for many of the relevant principles, there was insufficient information to make ratings. Some of these principles might be surprising to those who are not familiar with forecasting research, for example: “Use all important variables (Principle 10.2).” Others are principles that any scientific paper should be expected to address, such as: “Use objective tests of assumptions.” And others are especially important to climate forecasting, such as: “Limit subjective adjustments of quantitative forecasts.”41

The number of violations of forecasting principles found by the audit confirms the survey finding described earlier that the IPCC authors were unaware of forecasting principles.42 Had they been aware of the principles, it would have been incumbent on them to present evidence to justify their departures from them. They did not do so. Because the forecasting processes examined in Chapter 8 overlook scientific evidence on forecasting, the IPCC forecasts of climate change are not scientific.

Climate change forecasters should use the readily available Forecasting Audit program to ensure that they are using appropriate forecasting procedures. Outside evaluators should also be encouraged to conduct audits. These reports should be made available to both study sponsors and the public by posting on an open Web site such as publicpolicyforecasting.com.

Three elements are necessary for governments to make rational policies in response to climate change: Scientists must accurately predict (1) global temperature changes, (2) the effects of any temperature changes and (3) the effects of feasible alternative policy responses. To justify policy changes, governments need scientific forecasts for all three forecasting problems and they need those forecasts to show net benefits flowing from proposed policies. If governments implement policy changes without such justification, they are likely to cause harm.

This paper has shown that failure occurs with the first forecasting problem: predicting temperature over the long term. Specifically, no scientific forecast supports the widespread belief in dangerous human-caused “global warming.” Climate is complex and there is much uncertainty about causal relationships and data. Prior research on forecasting suggests that in such situations a naïve (no change) forecast would be superior to current predictions. Note that recommending the naïve forecast does not mean that climate will not change. It means that current knowledge about climate is insufficient to make useful long-term forecasts about climate. Policy proposals should be assessed on that basis.

Many policies have been proposed in association with claims of global warming. This paper does not purport to comment on specific policy proposals, but it should be noted that some policies may be valid regardless of future climate changes. To assess this, it would be necessary to directly forecast costs and benefits that assume: (1) that climate does not change and (2) that climate changes in a variety of ways.

The evidence shows that those forecasting long-term climate change have limited or no apparent knowledge of evidence-based forecasting methods; therefore, similar conclusions apply to the second and third elements of the forecasting problem. Public policy makers owe it to the people who would be affected by their policies to base them on scientific forecasts. Advocates of policy changes have a similar obligation. Hopefully, climate scientists with diverse views will begin to embrace forecasting principles and will collaborate with forecasting experts in order to provide policy makers with scientific climate forecasts.

NOTE: Nothing written here should be construed as necessarily reflecting the views of the National Center for Policy Analysis or as an attempt to aid or hinder the passage of any bill before Congress.

- This paper is based substantially on Kesten C. Green and J. Scott Armstrong, “Global Warming: Forecasts by Scientists versus Scientific Forecasts,” Energy and Environment, Vol. 18, Nos. 7 & 8, 2007, pages 997-1021.

- Intergovernmental Panel on Climate Change, “About IPCC,” undated. Available at http://www.ipcc.ch/about/index.htm. Access verified December 10, 2007.

- Intergovernmental Panel on Climate Change, Climate Change 2007: The Physical Science Basis (New York: Cambridge University Press, 2007). Available at http://ipcc-wg1.ucar.edu/wg1/wg1-report.html. Access verified December 10, 2007.

- Many observers have claimed the IPCC presents scenarios or projections rather than forecasts. See K. E. Trenberth, “Predictions of Climate,” Climate Feedback: The Climate Change Blog, Nature.com, June 4, 2007. Available at http://blogs.nature.com/climatefeedback/2007/06/predictions_of_climate.html. Access verified December 10, 2007. A forecast or prediction is an estimate of actual values in a future time period (for time series) or for another situation (for cross-sectional data). The terms “scenario” and “projection” appear to be used in the IPCC’s Fourth Assessment Report to indicate that it provides “conditional forecasts.” As it happens, in Chapter 8, “Climate models and their evaluation,” the report uses the word “forecast” and its derivatives 37 times, and “predict” and its derivatives 90 times. Furthermore, the majority (31 of the 51) of scientists who responded to a survey for this study agreed that the IPCC report is the most credible source of forecasts (not “scenarios” or “projections”) of global average temperature.

- W. Ascher, Forecasting: An Appraisal for Policy Makers and Planners (Baltimore, Md.: Johns Hopkins University Press, 1978).

- P.E. Tetlock, Expert Political Judgment: How Good Is It? How Can We Know? (Princeton, N.J.: Princeton University Press, 2005).

- J. Scott Armstrong, “Extrapolation for time-series and cross-sectional data,” in J. S. Armstrong, ed., Principles of Forecasting (Norwell, Mass.: Kluwer Academic Press, 2001).

- Reid Bryson, the most-cited climatologist in academic studies worldwide, said a model is “nothing more than a formal statement of how the modeler believes that the part of the world of his concern actually works.” Reid A. Bryson, “Environment, Environmentalists, and Global Change: A Skeptic’s Evaluation,” New Literary History, Vol. 24, No. 4, 1993, pages 783-795.

- Joseph Bast and James M. Taylor, Scientific Consensus on Global Warming (Chicago, Ill.: Heartland Institute, 2007). Available at http://downloads.heartland.org/20861.pdf. Access verified December 10, 2007. (It includes the responses to all questions in the 1996 and 2003 surveys by Dennis Bray and Hans von Storch as an appendix.)

- Polls show that the general public is also skeptical of the ability of climate models to accurately portray the future: 40 percent agreed that “climate change was too complex and uncertain for scientists to make useful forecasts,” while 38 percent disagreed. Paul Eccleston, “Public ‘in denial’ about climate change,” Telegraph.co.uk, March 7, 2007. Available at http://www.telegraph.co.uk/earth/main.jhtml?xml=/earth/2007/07/03/eawarm103.xml. Access verified December 10, 2007.

- Orrin H. Pilkey and Linda Pilkey-Jarvis, Useless Arithmetic: Why Environmental Scientists Can’t Predict the Future (New York, N.Y.: Columbia University Press, 2007).

- Bryson, “Environment, Environmentalists, and Global Change.”

- Numerous studies have concluded that the use of climate models for forecasting has failed. See, for example, Robert C. Balling, “Observational surface temperature records versus model predictions,” in P. J. Michaels, ed., Shattered Consensus: The True State of Global Warming (Lanham, Md.: Rowman & Littlefield, 2005), pages 50-71; John Christy, “Temperature Changes in the Bulk Atmosphere: Beyond the IPCC,” in Michaels, Shattered Consensus, pages 72-105; Oliver W. Frauenfeld, “Predictive Skill of the El Nino-Southern Oscillation and Related Atmospheric Teleconnections,” in Michaels, Shattered Consensus, pages 149-182; Eric S. Posmentier and Willie Soon, “Limitations of Computer Predictions of the Effects of Carbon Dioxide on Global Temperature,” in Michaels, Shattered Consensus, pages 241-281.

- Freeman J. Dyson, “Heretical Thoughts About Science and Society,” Edge: The Third Culture, August 8, 2007. Available at http://www.edge.org/3rd_culture/dysonf07/dysonf07_index.html. Access verified December 10, 2007.

- R. M. Carter, “The myth of dangerous human-caused climate change,” The Australasian Institute of Mining and Metallurgy New Leaders Conference, Brisbane, Queensland, May 3, 2007, pages 61-74. Available at http://members.iinet.net.au/~glrmc/new_page_1.htm. Access verified December 10, 2007.

- C. Essex and R. McKitrick, Taken by Storm: The Troubled Science, Policy & Politics of Global Warming (Toronto, Ont.: Key Porter Books, 2002).

- D.A. Stainforth et al., “Uncertainty in predictions of the climate response to rising levels of greenhouse gases,” Nature, Vol. 433, 2005, pages 403-406.

- David Bellamy and Jack Barrett, “Climate stability: an inconvenient proof,” Proceedings of the Institution of Civil Engineers – Civil Engineering, Vol. 160, May 2007, pages 66-72.

- Ibid., page 72.

- Noted by former Colorado State Climatologist Roger Pielke Sr. See “Interview By Marcel Crok Of Roger A. Pielke Sr.,” April 30, 2007. Available at http://tinyurl.com/2wpk29. Access verified December 10, 2007.

- Trenberth, “Predictions of climate.”

- M. Taylor, “An evaluation of NIWA’s climate predictions for May 2002 to April 2007,” Climate Science Coalition, 2007. Available at http://nzclimatescience.net/images/PDFs/climateupdateevaluationtext.pdf. Data available at http://nzclimatescience.net/images/PDFs/climateupdateevaluationcalc.xls.pdf.

- New Zealand Climate Science Coalition, “World climate predictors right only half the time,” Scoop Independent News (New Zealand), media release, June 7, 2007. Available at http://www.scoop.co.nz/stories/SC0706/S00026.htm. Access verified December 10, 2007.

- This study is the only comprehensive review of climate forecasting efforts to date, though there have been more limited reviews of some aspects of the IPCC’s forecasting principles and assessments of the actual accuracy of the forecasts made. For example, the National Defense University (NDU) forecasting process (described above) was criticized in an audit by T. R. Stewart and M. H. Glantz for lacking awareness of proper forecasting methods. The audit was hampered because the organizers of the NDU study said that the raw data had been destroyed. Judging from a Google Scholar search for citations, climate forecasters have paid little attention to that paper. T. R. Stewart and M. H. Glantz, “Expert judgment and climate forecasting: A methodological critique of ‘Climate Change to the Year 2000,’” Climatic Change, Vol. 7, 1985, pages 159-183.

- Bryson, “Environment, Environmentalists, and Global Change,” pages 783-795.

- The best-selling textbook on forecasting methods was referenced twice in a search for the term “global warming,” but neither citation related to the prediction of global mean temperatures. S. Makridakis, S. C. Wheelwright and R. J. Hyndman, Forecasting: Methods and Applications, 3rd ed. (Hoboken, N.J.: John Wiley, 1998).

- As discussed in the sidebar, the majority of scientists responding to our inquiries concerning what climate forecasts were most credible and how they were determined referenced Chapters 8 through 10 of the IPCC WG1 2007 report, Climate Change 2007. Available at http://ipcc-wg1.ucar.edu/wg1/wg1-report.html. Access verified December 10, 2007.

- Including Chapter 10 of the IPCC Report, Gerald A. Meehl et al., “Global Climate Projections,” in Climate Change 2007, pages 747-846. Available at http://ipcc-wg1.ucar.edu/wg1/Report/AR4WG1_Print_Ch10.pdf. Access verified December 10, 2007.

- David R. Henderson, “Governments and Climate Change Issues: The Case for Rethinking,” World Economics, Vol. 8, No. 2, 2007, pages 183-228. Available at http://forecastingprinciples.com/Public_Policy/Henderson2007paper.pdf. Access verified December 10, 2007.

- Freeman Dyson wrote that “The real world is muddy and messy and full of things that we do not yet understand.” Dyson, “Heretical Thoughts About Science and Society.”

- Accurate direct measurements of tropospheric global average temperature have only been available since 1979, and they show no evidence for greenhouse warming. Surface thermometer data, though flawed, show temperature stasis since 1998. Carter, “The myth of dangerous human-caused climate change.”

- H. Le Treut et al., “Historical Overview of Climate Change,” in IPCC, Climate Change 2007. Available at http://ipcc-wg1.ucar.edu/wg1/Report/AR4WG1_Print_Ch01.pdf. Access verified December 10, 2007. See also, Willie Soon, “Implications of the secondary role of carbon dioxide and methane forcing in climate change: Past, present and future,” Physical Geography, July 4, 2007. Available at http://arxiv.org/ftp/arxiv/papers/0707/0707.1276.pdf. Access verified December 10, 2007.

- Carter, “The myth of dangerous human-caused climate change.”

- Even with perfect knowledge of emissions, uncertainties in the representation of atmospheric and oceanic processes by climate models limit the accuracy of any estimate of the climate response. Natural variability, generated both internally and from external forcings such as changes in solar output and explosive volcanic eruptions, also contributes to the uncertainty in climate forecasts. P. A. Stott and J. A. Kettleborough, “Origins and estimates of uncertainty in predictions of twenty-first century temperature rise,” Nature, Vol. 416, 2002, pages 723-726.

- For example, the chapter includes the statements “It is not yet possible to determine which estimates of the climate change cloud feedbacks are the most reliable” and “Despite advances since the TAR (Third Assessment Report), substantial uncertainty remains in the magnitude of cryospheric feedbacks within AOGCMs.” (Cryospheric feedbacks are responses of frozen areas of the earth, namely the world’s Arctic and Antarctic regions and glaciers, to climate changes. AOGCMs are general circulation models of the ocean/atmosphere make-up and interaction.) The following words and phrases appear at least once in the chapter: unknown, uncertain, unclear, not clear, disagreement, not fully understood, appears, not well observed, variability, variety, unresolved, not resolved, and poorly understood. David A. Randall et al., “Climate Models and their Evaluation,” in IPCC, Climate Change 2007, page 593. Available at http://ipcc-wg1.ucar.edu/wg1/Report/AR4WG1_Print_Ch08.pdf. Access verified December 10, 2007.

- Ibid., pages 608-609.

- P. G. Allen and R. Fildes, “Econometric Forecasting,” in Armstrong, Principles of Forecasting; J. Scott Armstrong, Long-Range Forecasting: From Crystal Ball to Computer (New York, N.Y.: Wiley-Interscience, 1985); G. T. Duncan, W. L. Gorr and J. Szczypula, “Forecasting Analogous Time Series,” in Armstrong, Principles of Forecasting; D. Wittink and T. Bergestuen, “Forecasting with Conjoint Analysis,” in Armstrong, Principles of Forecasting.

- H. Halide and P. Ridd, “Complicated ENSO models do not significantly outperform very simple ENSO models,” International Journal of Climatology, 2007, in press.

- G. C. Hegerl et al., “Understanding and Attributing Climate Change,” in IPCC, Climate Change 2007. Available at http://ipcc-wg1.ucar.edu/wg1/Report/AR4WG1_Print_Ch09.pdf. Access verified December 10, 2007.

- Armstrong, Principles of Forecasting.

- As noted, the ratings in a forecasting audit involve some judgment. As a check on the auditors’ judgments, the detailed ratings were sent to 240 of the scientists and policymakers surveyed earlier for information on the use of forecasting principles. They were asked to review the paper and to identify any inaccuracies. They were also invited to make their own ratings for publication at publicpolicyforecasting.com. As of this writing, none have done so.

- The authors’ ratings of the processes used to generate the forecasts presented in the IPCC report are provided on the Public Policy Forecasting Special Interest Group Page at forecastingprinciples.com. These ratings were posted in late June 2007, when the authors’ paper was presented at the International Symposium on Forecasting in New York.

- David A. Randall et al., “Climate Models and their Evaluation.”

- Because reliability is an issue with rating tasks, the authors sent out a general request for experts and several colleagues to use the Forecasting Audit Software to conduct their own audits. At this writing, none have done so.

- The final ratings are fully disclosed in the Special Interest Group section of the forecastingprinciples.com site that is devoted to Public Policy (publicpolicyforecasting.com) under Global Warming.

Kesten C. Green is a senior research fellow of the Business and Economic Forecasting Unit of Monash University. He is codirector of forecastingprinciples.com and is a pioneer of methods to predict the decisions people will make in conflict situations such as occur in wars and in business. Dr. Green has published in Energy and Environment, Interfaces, Foresight, International Journal of Business and the International Journal of Forecasting. He received a doctor of philosophy degree from Victoria University of Wellington.

J. Scott Armstrong is a professor at the Wharton School, University of Pennsylvania. Dr. Armstrong was a founder of the Journal of Forecasting, the International Journal of Forecasting and the International Symposium on Forecasting. He is the creator of the Web site forecastingprinciples.com and editor of Principles of Forecasting (Boston, Mass.: Kluwer, 2001), an evidence-based summary of knowledge on forecasting. In 1996, Armstrong was selected as one of the first six “Honorary Fellows” by the International Institute of Forecasters. He received a doctor of philosophy degree from the Massachusetts Institute of Technology in 1968.